Evals Aren’t Enough: Reliable Agents Need Simulation


AI agents don’t fail because they can’t answer a question. They fail because they’re systems: stochastic, stateful, tool-using pipelines that unfold over time. An agent can score well on an evaluation dataset and still break when it’s dropped into a real workflow. This isn’t a knock on evals; it’s a statement about what evals are structurally able to measure, and what they can’t.

The Evaluation Dataset Is Not the Workflow

Classic eval thinking assumes a bounded world: a dataset of tasks, a spec, a grader, a pass/fail. You run the suite, watch the number go up, and assume reliability improved. But agent failures are rarely “wrong answer” failures. They are trajectory failures—errors that emerge only after multiple steps.

The agent makes an early assumption that later becomes inconsistent. Tool outputs vary due to timeouts, schema changes, or partial results. The agent’s plan drifts as context grows and gets summarized. Small deviations compound into the wrong end state. A dataset-style eval typically captures snapshots of behavior, while real workflows expose dynamics.
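A back-of-the-envelope sketch makes the compounding concrete. It assumes, purely for illustration, that every step succeeds independently with the same probability `p_step`, so an n-step trajectory succeeds with probability `p_step ** n_steps`. Real agent steps are correlated, so read the output as intuition, not as a reliability model.

```python
def trajectory_success(p_step: float, n_steps: int) -> float:
    """Probability that all n_steps of a trajectory succeed.

    Illustrative assumption: steps succeed independently, each with the
    same probability p_step. Real agent steps are correlated.
    """
    if not 0.0 <= p_step <= 1.0:
        raise ValueError("p_step must be a probability")
    if n_steps < 0:
        raise ValueError("n_steps must be non-negative")
    return p_step ** n_steps


if __name__ == "__main__":
    # Print how 95% per-step reliability decays as trajectories get longer.
    for n in (1, 5, 10, 20, 50):
        print(f"{n:>2} steps at 95% per step: {trajectory_success(0.95, n):.3f}")
```

Under these assumptions, a 20-step workflow with 95% per-step reliability finishes cleanly only about 36% of the time, even though every individual step looks healthy in isolation.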

Key insight from Section I: That mismatch is why “passing one step” does not equal “validating the system.” Anthropic’s engineering guidance (“Demystifying evals for AI agents”) reflects this systems reality, emphasizing agent-specific design, multiple trials, and operational framing, because the “same” task can play out differently across runs.
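Running each task several times turns a single pass/fail bit into a success count. Two summaries often used for agents are pass@k (at least one of k trials succeeds) and pass^k (all k trials succeed). The sketch below uses the standard combinatorial estimators over n recorded trials with c successes; the function names are illustrative, not taken from any particular eval framework.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimated probability that at least one of k trials succeeds.

    n: trials run for the task, c: trials that succeeded.
    Unbiased estimator: 1 - C(n - c, k) / C(n, k).
    """
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    # math.comb returns 0 when k > n - c, so pass@k becomes 1.0 in that case.
    return 1.0 - comb(n - c, k) / comb(n, k)


def pass_hat_k(n: int, c: int, k: int) -> float:
    """Estimated probability that all k trials succeed (pass^k).

    Estimated as C(c, k) / C(n, k): the chance that k trials drawn without
    replacement from the n observed ones are all successes.
    """
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    return comb(c, k) / comb(n, k)
```

For a task that succeeds in 8 of 10 trials, `pass_at_k(10, 8, 3)` is 1.0 while `pass_hat_k(10, 8, 3)` is 56/120, about 0.47: the agent can almost always solve the task, but solves it three times in a row less than half the time. For reliability questions, the pass^k view is arguably the more relevant one.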

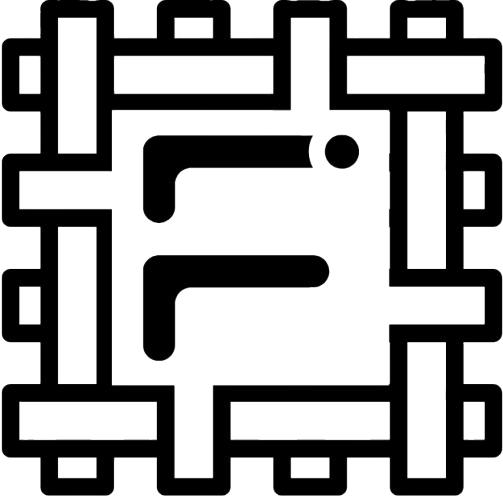

Three layers of agent reliability — evals, observability, and simulation

Why Evals Fail to Predict Reliability

The core problem is that evals often test outcomes without faithfully modeling the process that produces them. Agents are non-deterministic decision pipelines. If you measure success on a finite dataset, you can miss the long-tail failure modes that only appear when the agent explores different paths, tools return unexpected shapes, or the environment pushes back.
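The same arithmetic shows why a finite dataset misses long-tail failures. As a rough sketch, assume each run independently hits a given failure mode with probability `q`; then N runs never surface it with probability `(1 - q) ** N`. Both helper names are hypothetical, and the independence assumption is a simplification.

```python
import math


def miss_probability(q: float, n_runs: int) -> float:
    """Chance that n_runs independent runs never trigger a failure of rate q."""
    return (1.0 - q) ** n_runs


def runs_needed(q: float, confidence: float = 0.95) -> int:
    """Smallest N with 1 - (1 - q) ** N >= confidence.

    Assumes independent runs; real reruns of a fixed dataset are not
    independent samples of the agent's possible paths.
    """
    if not (0.0 < q < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("q and confidence must be in (0, 1)")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - q))
```

With q = 1% and 100 runs, the failure goes unseen about 37% of the time, and surfacing it with 95% confidence takes 299 independent runs. Rerunning the same fixed tasks is not independent sampling of paths, so a static dataset can do worse than this estimate suggests.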

Real environments are richer than task definitions. In production, “the environment” includes API behaviors, tool contracts, latency, partial failures, auth edge cases, and messy user inputs. Even if your dataset covers the “right” tasks, it is hard to cover the right interactions at the right moments.

Key insight from Section II: This is why recent research is shifting attention from “better graders” toward environment modeling: robustness comes from exposure to diverse trajectories and feedback, not only from scoring more examples.

Simulation Is the Missing Layer

Simulation flips the question from “Did the agent pass this dataset?” to “Does the agent behave correctly across the space of realistic workflows?” A simulation environment can produce multi-step, stateful scenarios rather than isolated examples, generate counterfactuals and perturbations, support deterministic replay for debugging and regression, and stress-test long-horizon behavior where drift and compounding errors actually appear.
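The properties listed above can be sketched in a few dozen lines. Everything here is hypothetical: `SimulatedOrderAPI` is a toy stateful tool whose timeouts, schema changes (a renamed field), and partial results are drawn from a seeded RNG, so each seed is a reproducible, perturbed scenario. `run_scenarios` judges each run by its end state rather than only by whether it crashed.

```python
import random
from dataclasses import dataclass, field


@dataclass
class SimulatedOrderAPI:
    """Hypothetical stateful tool for a toy 'refund an order' workflow.

    All perturbations come from a seeded RNG, so a given seed always
    reproduces the same scenario.
    """
    seed: int
    p_timeout: float = 0.1        # tool call times out
    p_schema_change: float = 0.1  # field 'total' renamed to 'amount'
    p_partial: float = 0.1        # response is missing fields
    refunds: list = field(default_factory=list)  # environment state

    def __post_init__(self) -> None:
        self.rng = random.Random(self.seed)

    def get_order(self, order_id: str) -> dict:
        roll = self.rng.random()
        if roll < self.p_timeout:
            raise TimeoutError(f"get_order({order_id!r}) timed out")
        if roll < self.p_timeout + self.p_schema_change:
            return {"id": order_id, "amount": 42.0, "status": "paid"}
        if roll < self.p_timeout + self.p_schema_change + self.p_partial:
            return {"id": order_id}  # partial result
        return {"id": order_id, "total": 42.0, "status": "paid"}

    def refund(self, order_id: str, amount: float) -> None:
        self.refunds.append((order_id, amount))


def naive_agent(api: SimulatedOrderAPI, order_id: str) -> None:
    """Toy two-step agent: look up the order, then refund its total."""
    order = api.get_order(order_id)
    if order.get("status") == "paid":
        api.refund(order_id, order["total"])


def run_scenarios(agent, seeds, **perturbations) -> dict:
    """Run the agent once per seed and return {seed: failure reason}."""
    failures = {}
    for seed in seeds:
        api = SimulatedOrderAPI(seed=seed, **perturbations)
        try:
            agent(api, "A-1")
        except Exception as exc:  # crash partway through the trajectory
            failures[seed] = type(exc).__name__
            continue
        if api.refunds != [("A-1", 42.0)]:  # check the end state, not just "no crash"
            failures[seed] = "wrong end state"
    return failures
```

Three kinds of failure are possible here, and one of them is quiet: on a partial result the agent skips the refund without raising, which only the end-state check catches. Calling `run_scenarios` again with the same seeds returns identical failures, which is what lets a failing seed serve as a regression case.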

Crucially, simulation creates a place to optimize, not just measure. One reason the eval-only approach stalls is that it tells you that something failed, but not reliably why, and it doesn’t give you a controllable environment in which to iterate. Simulation gives you an engine to diagnose, fix, and replay.
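Deterministic replay can be sketched as record-then-serve: wrap a tool so every call and its result are logged, persist the log, and later feed the same results back in the same order. `Recorder`, `Replayer`, `run_agent`, and the two toy agents are hypothetical names; for brevity this sketch records only returned values, not raised exceptions or timing.

```python
import json
from typing import Any, Callable


class Recorder:
    """Wraps a live (or simulated) tool and logs each call's args and result."""

    def __init__(self, tool: Callable[..., Any]) -> None:
        self.tool = tool
        self.log: list = []

    def __call__(self, **kwargs: Any) -> Any:
        result = self.tool(**kwargs)
        self.log.append({"args": kwargs, "result": result})
        return result


class Replayer:
    """Serves recorded results in order and fails loudly if the agent diverges."""

    def __init__(self, log: list) -> None:
        self.log = log
        self.position = 0

    def __call__(self, **kwargs: Any) -> Any:
        if self.position >= len(self.log):
            raise RuntimeError("agent made more tool calls than were recorded")
        entry = self.log[self.position]
        if entry["args"] != kwargs:
            raise RuntimeError(f"divergence at call {self.position}: {kwargs} != {entry['args']}")
        self.position += 1
        return entry["result"]


def buggy_agent(get_order: Callable[..., dict]) -> float:
    return get_order(order_id="A-1")["total"]


def fixed_agent(get_order: Callable[..., dict]) -> float:
    order = get_order(order_id="A-1")
    # Tolerate the renamed field observed in the recorded trajectory.
    return order["total"] if "total" in order else order["amount"]


def run_agent(agent, tool) -> tuple:
    """Return ("ok", value) or ("error", exception type name)."""
    try:
        return ("ok", agent(tool))
    except Exception as exc:
        return ("error", type(exc).__name__)


if __name__ == "__main__":
    # A "live" run hits a drifted schema; the recorder captures the response.
    recorder = Recorder(lambda order_id: {"id": order_id, "amount": 42.0})
    print(run_agent(buggy_agent, recorder))
    saved = json.dumps(recorder.log)  # persist the trajectory
    # Replay reproduces the failure deterministically, then verifies the fix.
    print(run_agent(buggy_agent, Replayer(json.loads(saved))))
    print(run_agent(fixed_agent, Replayer(json.loads(saved))))
```

Because the replayer also checks call arguments, a change that sends the agent down a different path fails loudly instead of silently replaying stale responses.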

Key insight from Section III: Research has started to formalize this (arXiv:2511.01824). Instead of hand-building brittle testbeds, you can use models to simulate environment feedback and generate diverse trajectories for training and improvement, explicitly treating “the environment” as a first-class object.

Evals Are Becoming Infinite Observability

A visible trend in the agent tooling ecosystem is that many eval platforms are converging toward always-on tracing: capture every prompt, tool call, intermediate step, cost, latency, and outcome—then slice it endlessly. The motivation is real: if agents fail across trajectories, you want full-fidelity traces to debug them.
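To illustrate what “capture every step, then slice it” means, here is a minimal in-process span recorder standing in for a real tracing SDK. The `Tracer` and `Span` classes and the `demo` workload are hypothetical. The `gen_ai.tool.name` attribute key is borrowed from the OpenTelemetry GenAI semantic conventions, which are still evolving, so check the current spec before relying on it.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    name: str
    attributes: dict = field(default_factory=dict)
    duration_s: float = 0.0
    error: Optional[str] = None


class Tracer:
    """Minimal in-memory trace recorder (a stand-in for a real tracing SDK)."""

    def __init__(self) -> None:
        self.spans: list = []

    @contextmanager
    def span(self, name: str, **attributes):
        s = Span(name, dict(attributes))
        start = time.perf_counter()
        try:
            yield s
        except Exception as exc:
            s.error = type(exc).__name__  # record the failure, then re-raise
            raise
        finally:
            s.duration_s = time.perf_counter() - start
            self.spans.append(s)


def error_rate_by(spans, attribute: str) -> dict:
    """Slice recorded spans by one attribute and report the error rate per value."""
    totals, errors = defaultdict(int), defaultdict(int)
    for s in spans:
        key = s.attributes.get(attribute)
        totals[key] += 1
        errors[key] += int(s.error is not None)
    return {k: errors[k] / totals[k] for k in totals}


def demo() -> dict:
    tracer = Tracer()
    for i, tool in enumerate(["search", "search", "crm_lookup", "search"]):
        try:
            with tracer.span("execute_tool", **{"gen_ai.tool.name": tool}):
                if i == 1:  # simulate one failing search call
                    raise TimeoutError("search timed out")
        except TimeoutError:
            pass  # a real agent loop would decide how to recover here
    return error_rate_by(tracer.spans, "gen_ai.tool.name")
```

A production setup would export spans through a tracing SDK rather than keep them in memory, but slicing by attribute after the fact is the same idea.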

But most of this “new eval stack” is essentially observability infrastructure—and increasingly it is built on or compatible with OpenTelemetry. LangSmith supports exporting traces via OpenTelemetry. Arize Phoenix ships OTEL wrappers for LLM and agent tracing. Major observability vendors now ingest GenAI traces via OpenTelemetry semantic conventions, reinforcing OTEL as the lingua franca.

Key insight from Section IV: This is good news for interoperability. Traces can move between tools and vendors, and teams don’t have to re-instrument everything. But it does not solve the core reliability gap, because observability is fundamentally post-hoc: it helps you understand failures after the agent has encountered real conditions, often after users have. If you want trustworthy agents, you need a layer that catches failures before deployment, in a controlled environment that can be replayed and optimized.
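One way to act on simulation results before deployment is a release gate: run a batch of seeded scenarios and ship only if the failure rate is confidently under a budget. This sketch assumes independent scenarios and uses the upper end of the Wilson score interval as a conservative estimate; `release_gate`, the 5% budget, and z = 1.96 (roughly 95% two-sided) are illustrative choices, not a standard. It also returns the failing seeds so they can be replayed.

```python
import math
from dataclasses import dataclass


@dataclass
class GateResult:
    ship: bool
    failure_rate_upper: float
    failing_seeds: list


def wilson_upper(failures: int, n: int, z: float = 1.96) -> float:
    """Upper end of the Wilson score interval for a binomial failure rate."""
    if n <= 0:
        raise ValueError("need at least one run")
    p = failures / n
    denom = 1 + z * z / n
    centre = p + z * z / (2 * n)
    margin = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return (centre + margin) / denom


def release_gate(results: dict, max_failure_rate: float = 0.05) -> GateResult:
    """Hypothetical gate: ship only if the failure rate is confidently in budget.

    results maps a simulation seed to whether that scenario passed.
    """
    failing = sorted(seed for seed, passed in results.items() if not passed)
    upper = wilson_upper(len(failing), len(results))
    return GateResult(upper <= max_failure_rate, upper, failing)
```

Even zero failures in 100 scenarios gives an upper bound of about 3.7%, so tight failure budgets need large scenario suites.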

Where Fabrik Fits

Fabrik is built around a simple premise: you can’t trust agents without simulating how they operate. Evals remain useful as a starting point and as guardrails, and observability is essential once you ship. But reliability—the kind that prevents silent churn and compounding workflow failure—requires a simulation layer that tests agents as systems, not as single outcomes on a dataset.

That is the gap Fabrik exists to fill: simulate real workflows pre-production, surface hidden failure modes, and provide deterministic replay so teams can ship agents with evidence—not hope.

Key insight from Section V: The three layers work together. Evals give you a baseline. Observability gives you production insight. Simulation gives you pre-deployment confidence. Without all three, you are flying blind at one stage or another. Fabrik ensures the simulation layer is no longer the missing piece.

Agent failures are trajectory failures—errors that emerge across multiple steps, not wrong answers to single questions.

Evaluation datasets test outcomes without modeling the process, missing long-tail failures that appear only under diverse execution paths.

Simulation flips the question from “did the agent pass?” to “does it behave correctly across realistic workflows?”—enabling optimization, not just measurement.

The eval tooling ecosystem is converging on OpenTelemetry-based observability, which is valuable but fundamentally post-hoc.

Reliability requires three layers working together: evals for baseline, observability for production insight, and simulation for pre-deployment confidence.

References:
Anthropic, “Demystifying evals for AI agents” (Jan 2026).
arXiv:2511.01824, “Simulating Environments with Reasoning Models for Agent Training.”
LangSmith, OpenTelemetry tracing documentation.
Arize Phoenix, OTEL tracing setup.
OpenTelemetry, semantic conventions for GenAI.