The Fabrik Architecture: Building Trustworthy AI Agents

Scroll to begin

AIagentsfailinwaysthattraditionaltestingcannotpredict.Multi-stepworkflowscreateemergentfailuremodesinvisibletocomponent-levelevaluation.Fabrikaddressesthisthroughacompletearchitecturespanningpre-productionsimulationtoproductionmonitoring—deterministicisolation,toolinterception,contextengineering,adversarialgeneration,traceanalysis,anddriftdetectionworkingasanintegratedsystemtocatchfailuresbeforeusersexperiencethem.

System Overview

Agent failures are emergent properties of multi-step workflows under variable conditions. A customer service agent might succeed at each individual step—retrieving account data, analyzing the issue, drafting a response—yet produce an incorrect outcome because context degrades between steps, or because an edge case triggers unexpected tool behavior, or because adversarial input exploits a prompt weakness.

Traditional testing approaches evaluate components in isolation. They test individual LLM calls, verify single tool invocations, check discrete logic branches. This misses the interaction effects that define real agent behavior. The system as a whole exhibits failure modes invisible when examining its parts separately.

Key insight from Section IFabrik is architected as a complete pipeline from pre-production simulation through production monitoring. Six integrated subsystems work together: deterministic isolation enables reproducible testing, tool mocking provides controlled environments, context engineering prevents information loss, adversarial generation stress-tests boundaries, trace analysis detects silent failures, and drift monitoring catches degradation over time.

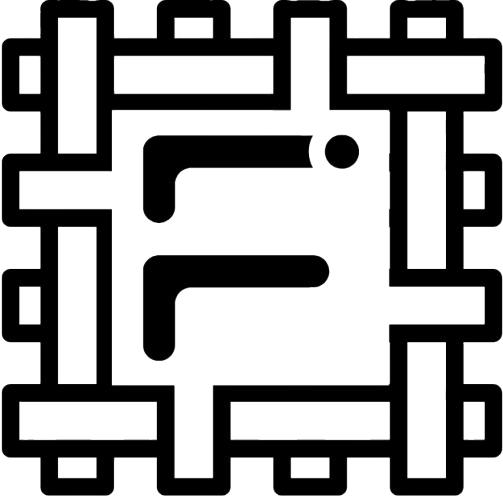

Six subsystems forming the Fabrik trust layer

Isolation & Interception

Reproducible testing requires eliminating all sources of non-determinism. Fabrik instantiates each agent in an ephemeral container with kernel-level isolation. Network access is blocked except for a controlled loopback interface. The container filesystem is wiped after each run. Seed values fix any remaining stochastic processes. No external entropy can contaminate results.

Tool mocking occurs at three possible layers, each with distinct tradeoffs. Network-level interception provides complete isolation but loses visibility into call semantics—you see bytes on the wire, not function calls with typed parameters. Function-level mocking preserves semantics but requires invasive code modification that invalidates test fidelity. Library-level interception provides the optimal balance: SDK client constructors are wrapped to inject mock implementations, preserving the full call interface while enabling deterministic response injection. The agent code remains completely unmodified.

Key insight from Section IIResponse synthesis requires careful calibration. Mock responses must be realistic enough to exercise actual agent behavior patterns. Production traffic analysis generates statistically representative response distributions. Edge cases are synthesized through systematic perturbation: adding latency spikes, injecting transient errors, returning boundary values. This reveals how agents handle conditions that happy-path testing never encounters. Deterministic replay queues map each request to a predetermined response, enabling exact reproduction of complex multi-turn interactions where agent decisions depend on accumulated context from previous steps.

Workflow Execution

Multi-step workflows present a fundamental context problem. Information must flow between steps—the account retrieval step passes customer data to the analysis step, which passes conclusions to the response generation step. Without deliberate engineering, this context degrades through three mechanisms: truncation when context exceeds token limits, dilution when verbose outputs bury critical details, and corruption when state transforms incorrectly during transfer.

Fabrik enforces schema-validated context structures. Each workflow step declares its required inputs and guaranteed outputs as typed schemas. Transitions validate that context contains all required fields with correct types before allowing execution to proceed. This transforms implicit context passing into explicit, auditable data flow. The schema acts as a contract—upstream steps must provide what downstream steps require.

Key insight from Section IIICheckpoint validation occurs between every step. The framework compares actual context state against the expected schema, checking not just that required fields exist but that values fall within expected ranges and maintain semantic coherence with prior steps. Violations trigger immediate alerts rather than propagating corrupted state forward. For critical workflows, human-in-the-loop escalation pauses execution and requests manual intervention, ensuring high-stakes processes never proceed with incomplete information.

Safety & Robustness

Production agents face inputs deliberately crafted to exploit weaknesses. Prompt injections attempt to override system instructions. Malformed data structures trigger parsing failures. Semantic edge cases fall outside normal distributions. Traditional evaluation optimizes for average-case performance on curated test sets, missing the adversarial failure modes most likely under attack.

Fabrik employs red-team LLMs specifically trained to generate adversarial scenarios. The system produces poisoned prompts, boundary-condition inputs, and semantic edge cases at scale. Each generated input receives tags indicating its attack vector category and expected failure mode, enabling systematic coverage analysis. Generation ensures diversity across attack types while maintaining realistic input structures—the adversaries must be plausible enough that standard input validation would accept them.

Key insight from Section IVTrace-based failure detection addresses the silent failure problem. Agents can produce plausible but incorrect outputs that evade standard monitoring because they trigger no exceptions and generate well-formed responses. Complete execution traces capture full context state at each step, all tool calls with parameters and responses, and intermediate reasoning outputs. Automated analysis compares executions against expected patterns derived from successful runs. Deviations surface silent failures—hallucinated data, context confusion, semantic drift—that would otherwise reach users. Traces enable deterministic replay, allowing engineers to step through failed executions and identify the exact divergence point.

Production Continuity

Long-running agents exhibit gradual behavioral drift that evades point-in-time testing. Context accumulates irrelevant information that crowds out relevant signals. Prompt templates degrade as edge-case patches create inconsistency. External APIs shift behavior subtly. These changes accumulate until agent behavior diverges significantly from validated patterns.

Continuous drift monitoring embeds agent outputs into high-dimensional semantic spaces and tracks distribution shifts over time. Statistical process control techniques adapted from manufacturing quality assurance detect when output distributions exceed normal variation bounds. The baseline distribution is established during pre-production validation; production monitoring flags movement away from this center.

Key insight from Section VAutomated correction strategies respond to detected drift. Mild drift triggers context pruning to remove accumulated noise. Moderate drift prompts template refresh from validated baselines. Severe drift initiates automatic rollback to the last known-good agent version while alerting engineering teams. This ensures user experience remains consistent while root cause analysis proceeds—the system degrades gracefully rather than failing catastrophically.

The Integration

These six subsystems form an integrated pipeline. Pre-production simulation tests agents in deterministically isolated environments using mocked tools and adversarial scenarios. Context engineering ensures multi-step workflows preserve critical information. Trace analysis detects silent failures before deployment. Production monitoring catches drift and enables graceful degradation.

The architecture follows a deliberate philosophy: catch failures at the earliest possible stage. Deterministic simulation catches reproducible bugs. Adversarial generation catches boundary violations. Context validation catches information loss. Trace analysis catches silent failures. Drift monitoring catches temporal degradation. Each layer provides defense in depth.

Key insight from Section VIThis approach is necessary because agent reliability cannot be achieved through better prompts alone, or better models alone, or better tool implementations alone. Agent failures emerge from the interaction of prompts, models, tools, context, and execution environment. The system must be evaluated as a system, under realistic conditions, before users encounter failures. That is the architectural principle underlying Fabrik.

Agent failures emerge from workflow-level interactions, not component-level bugs—testing in isolation misses the failure modes that matter.

Library-level tool interception preserves call semantics while enabling deterministic mocking, providing the optimal tradeoff for reproducible testing.

Schema-validated context structures prevent the degradation that causes most multi-step workflow failures.

Adversarial scenario generation reveals vulnerabilities that curated test sets systematically miss.

Trace-based analysis enables detection of silent failures that produce plausible but incorrect outputs.

Continuous drift monitoring catches temporal degradation before it impacts users, enabling proactive correction.